A high-quality website is easy to read, easy to navigate, responsive, full of useful content, and crawlable. But just what is website crawlability? How would you know if your site is sufficiently crawlable or if there are any crawl errors, especially on important pages? Is there a URL inspection tool or other checker you can use?

Simply defined, a website is crawlable if search engines such as Google have no problem finding it, analyzing it, and listing its pages. Crawlability is vital because pages that cannot be crawled cannot rank, and the higher your website ranks on search engine results pages (SERPs), the more people will become aware of your business. That makes crawlability a core part of technical SEO and of any successful SEO strategy.

TL;DR – To check if your website is crawlable, use the Google Search Console URL Inspection Tool to see if your URL is indexable. Additionally, the free version of the Screaming Frog SEO Spider can audit your site’s URL crawlability. Regular SEO audits are necessary because best practices keep evolving.

Understanding Website Crawlability

Every search engine runs crawlers: automated bots that traverse the web looking for pages. Web crawlers, by the way, are sometimes known as spider bots or spiders.

When a search engine crawler lands on a site, it goes through the content looking for links to follow. Yes, every moment of every day, crawlers are out there online, looking for links. They save copies of the HTML pages they find in their search engine’s index, which is its gigantic database of web pages.

That way, the search engine’s algorithm can rank all those pages. And those rankings will be available to anyone who uses that search engine.

The term “crawlability,” then, refers to how simple or complicated it is for search bots to reach your website, navigate it, make sense of it, and index its pages. Indeed, in order for bots to index your web pages correctly, those pages must each have a clear purpose and meaning.

For instance, if you own a house cleaning business, each of your web pages should, in obvious ways, relate to house cleaning. All of their descriptions, images, photo captions, and videos should explain your cleaning services in an easily comprehensible manner.
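The link-following behaviour described above can be sketched in a few lines of Python. This is only an illustration, not how any real search engine works: the `SITE` dictionary is a hypothetical house cleaning site held in memory, and `crawl` is a made-up helper that follows links breadth-first from the home page.

```python
from collections import deque

# Hypothetical site: each page maps to the internal links found on it.
SITE = {
    "/": ["/services", "/about"],
    "/services": ["/services/deep-clean", "/"],
    "/about": ["/"],
    "/services/deep-clean": [],
    "/old-promo": ["/"],  # no other page links here
}

def crawl(site, start="/"):
    """Return {page: number of clicks from start} for every reachable page."""
    depth = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for link in site.get(page, []):
            if link not in depth:  # visit each page only once
                depth[link] = depth[page] + 1
                queue.append(link)
    return depth

reached = crawl(SITE)
unreached = sorted(set(SITE) - set(reached))
print(reached)
print(unreached)  # ['/old-promo']
```

In this sketch `/old-promo` exists but is never reached, because a crawler that starts at the home page has no link to follow to it.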

Improving Your Website’s Crawlability

Search bots visit websites at different frequencies. A site might get crawled every day or once every several months. This crawling rate depends on various factors, including how popular and “important” a site is. For example, an international corporation’s site would likely be crawled more often than the site of a small local business.

Fortunately, anyone can take concrete steps to make a website more attractive to search engine crawlers. Here are just a few examples:

1. Updating Pages

Be sure to change, add to, and update your site’s content as often as you can. Search bots are intrigued by new content, and they tend to crawl a site more often if there’s consistently new material to explore.

Moreover, original and relevant content is at least as important as new content. If crawlers think that your site’s material is off-topic or too similar to items found elsewhere on the internet, they might not index your web pages. On the other hand, fresh and pertinent content always appeals to search engine bots.

Also, whenever you update your website’s content, update your keywords as well. In short, keyword optimization is key. This process involves placing popular and relevant terms throughout your site at optimal rates. Of course, a search engine optimization (SEO) professional could help you figure out which keywords to use and where to position them.

2. Making an XML Sitemap

Producing a sitemap is another way to make your website more crawler-friendly. A sitemap is a file, generally an XML document or a plain text file, that lists your site’s pages. Plus, it tells bots how your site is structured: the organization of your web pages and the connections between them. And it lets crawlers know which pages are the most important and information-rich.

In short, if your website contains a detailed sitemap, crawlers will have an easier time getting around. And you’ll help search engines to catalog your content more accurately. On top of that, your map will tell bots when you have new content and where it can be found.

Furthermore, send your sitemap to Google Search Console so that Google’s bots can find their way around your site faster. And, whenever you add, delete, or restructure your web pages, modify your sitemap and resend it to Google Search Console.
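As a rough sketch of what an XML sitemap contains, the Python below builds one with the standard library. The `build_sitemap` helper, the example.com URLs, and the dates are all hypothetical; the `urlset`, `url`, `loc`, and `lastmod` element names come from the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(pages):
    """Build sitemap XML from (url, last-modified date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, lastmod in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        ET.SubElement(entry, "lastmod").text = lastmod  # YYYY-MM-DD
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body

# Hypothetical pages for illustration only.
sitemap_xml = build_sitemap([
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/services", "2024-02-01"),
])
print(sitemap_xml)
```

The resulting text would typically be saved as `sitemap.xml` at the root of the site and regenerated whenever pages are added, removed, or moved.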

3. Increasing Your Site’s Speed

If your web page load time is slow, crawlers might give up and leave your website before they get a chance to index everything. Thus, it helps to speed up your site, and there are different ways of doing so. Here are just a few examples:

- Find a faster web host or web server.

- Take advantage of cloud hosting services to increase your site’s overall capacity.

- Make sure all of your images are the correct size, especially when it comes to mobile browsing. And compress any images that can be compressed.

- Minimize the number of plugins, widgets, and advertisements on your site.

4. Fixing the Links

Increasing and rearranging your website’s internal links can be quite beneficial. Every web page ought to be linked from at least one other page, since crawlers discover pages by following links. Make your internal links easy to find, too.

Keep an eye out for broken links as well. A broken link is one that leads nowhere, either because you’ve removed a certain web page or because the URL is misspelled. Either way, spider bots definitely don’t appreciate broken links.
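One way to spot broken internal links is to collect every link on a page and compare it with the list of pages that actually exist. The sketch below assumes you already have that list; `LinkCollector`, `broken_internal_links`, and the sample HTML are hypothetical names and data made up for illustration.

```python
from html.parser import HTMLParser

class LinkCollector(HTMLParser):
    """Collect the href value of every <a> tag in an HTML document."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)

def broken_internal_links(html, existing_paths):
    """Return links starting with '/' that match no known page."""
    collector = LinkCollector()
    collector.feed(html)
    return [link for link in collector.links
            if link.startswith("/") and link not in existing_paths]

# Hypothetical page with one misspelled internal link.
PAGE = (
    '<a href="/services">Services</a> '
    '<a href="/servcies/deep-clean">Deep clean</a> '
    '<a href="https://example.org/">Partner</a>'
)
EXISTING = {"/", "/services", "/services/deep-clean"}

broken = broken_internal_links(PAGE, EXISTING)
print(broken)  # ['/servcies/deep-clean']
```

External links are skipped here on purpose; checking them would mean requesting each URL and looking at the response.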

5. Using a Robots.txt File

You could also set rules for how search engine bots crawl your site. Those instructions, which are called directives, are shared with crawlers through a robots.txt file placed at the root of your site.

Imagine you have certain web pages with duplicate, unoriginal, out-of-date, or otherwise low-quality content. And unfortunately, you don’t have the time right now to update those pages.

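In such a case, your robots.txt file could tell search engine crawlers to stay away from those particular pages. As a result, crawlers wouldn’t spend time on them, and they would be less likely to harm your search engine rankings. Keep in mind that robots.txt controls crawling rather than indexing: a blocked URL can still appear in search results if other pages link to it.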
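To see how such directives are read, the sketch below feeds a hypothetical robots.txt to Python’s standard `urllib.robotparser` module and asks whether a crawler may fetch a given path. The `/archive/` and `/drafts/` folders, the example.com domain, and the `allowed` helper are assumptions made up for this example.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that keeps all crawlers out of two folders.
ROBOTS_TXT = """\
User-agent: *
Disallow: /archive/
Disallow: /drafts/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

def allowed(path, agent="Googlebot"):
    """Return True if the rules above let this crawler fetch the path."""
    return parser.can_fetch(agent, "https://example.com" + path)

print(allowed("/services"))             # True
print(allowed("/archive/2015-prices"))  # False
```

Running a check like this before publishing a new robots.txt is a cheap way to confirm that important pages are not blocked by accident.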

How to Assess Your Site’s Crawlability

At this point, you might be wondering: Just how crawlable is my website? How could I evaluate it?

Luckily, there are convenient SEO tools that you could use to assess your site’s crawlability. And, if you discover that it’s not sufficient, you could look into ways of improving it.

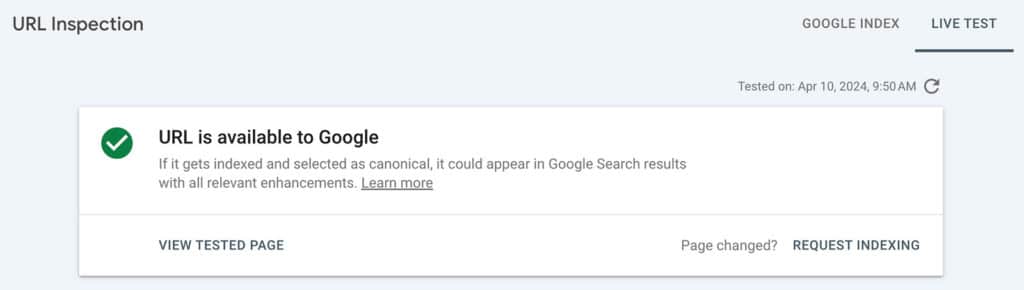

For example, you could submit your URLs to Google Search Console (which is mentioned above). In turn, this free Google service would tell you whether those URLs are able to show up in Google Search, whether Google can crawl and index them satisfactorily, and whether they have any mobile usability problems. To use the tool, paste your URL into the URL Inspection Tool and press Test Live URL. Your URL is indexable if you receive a “URL is available to Google” message. If it is not indexable, you will receive a warning or an error.

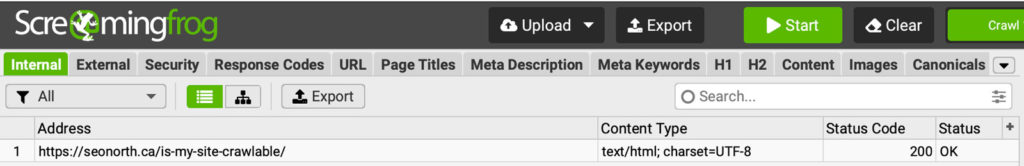

Another excellent SEO auditing tool is Screaming Frog, which offers a free version you can download. The free version is limited to 500 URLs per crawl, which is enough for many small sites. Screaming Frog will run its own crawler through your website. Afterward, it will provide you with a detailed assessment of your site, your URL hierarchy and structure in particular. Your pages need to return a status code of 200 (OK) to be indexable. Once you’ve made all the necessary changes, Googlebot and other crawlers should have no trouble with your site!
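If you only want a quick look at status codes, a small script can do a similar check. This is a minimal sketch, not a replacement for a full audit: `fetch_status` and `needs_attention` are hypothetical helpers, the sample results are made up, and some servers answer HEAD requests differently from normal page views.

```python
import urllib.error
import urllib.request

def fetch_status(url, timeout=10):
    """Return the HTTP status code for url (urllib follows redirects)."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        return error.code  # 4xx and 5xx responses arrive as exceptions

def needs_attention(statuses):
    """Given {url: status code}, return the URLs that did not answer 200."""
    return {url: code for url, code in statuses.items() if code != 200}

# Hypothetical audit results; in practice, build this dictionary with
# fetch_status(url) for each URL you care about.
SAMPLE = {
    "https://example.com/": 200,
    "https://example.com/old-page": 404,
    "https://example.com/login": 500,
}
flagged = needs_attention(SAMPLE)
print(flagged)
```

Network failures such as timeouts or DNS errors raise `urllib.error.URLError` and are not handled in this sketch.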

In the end, SEO is a field that’s constantly changing, and website best practices are likewise always evolving. Therefore, it’s crucial to keep auditing and updating your site on a regular basis. If you do so, you might be surprised by how far your site climbs up the results pages of major search engines.

Finally, for the best SEO practices currently available, it makes sense to turn to experts. And, for the most crawler-friendly website possible — and for the biggest increases in web traffic possible — please email SEO North, an SEO expert and webmaster, for help at any time with your digital marketing needs.

FAQ

How can I ensure that my website is crawlable by search engines?