In the world of SEO and data science, understanding the relationship between pieces of content is crucial. One powerful tool to measure this relationship is cosine similarity. This metric plays a vital role in natural language processing (NLP), information retrieval, and even recommendation systems.

Table of Contents

What is Cosine Similarity?

Cosine similarity measures the cosine of the angle between two non-zero vectors in a multi-dimensional space. It helps determine how similar two documents or pieces of content are based on their vector representations. The resulting similarity score ranges from -1 to 1:

- 1: Vectors are identical (cosine angle = 0 degrees)

- 0: Vectors are orthogonal (unrelated)

- -1: Vectors are diametrically opposed

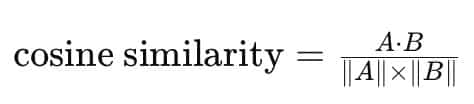

The Cosine Similarity Formula

The formula for cosine similarity is:

Where:

- A ⋅ B: The dot product of the vectors A and B. This is calculated by multiplying corresponding components of the vectors and summing the results.

- |A| and |B|: The magnitude of the vectors A and B, respectively. The magnitude is found by taking the square root of the sum of the squared components.

Preparing Text for Cosine Similarity

To apply the cosine similarity formula to text, we need to convert the text into numerical vectors. This requires a process called text vectorization, which is often preceded by essential text preprocessing steps to ensure accurate and meaningful results.

Text Vectorization: Representing Text as Numbers

Cosine similarity operates on numerical vectors. Therefore, to use it with text, we must first convert the text into a vector representation. This process is called text vectorization. Several methods exist, each with its own strengths and weaknesses:

- TF-IDF (Term Frequency-Inverse Document Frequency): TF-IDF measures the importance of a word within a document relative to a collection of documents (corpus). It assigns higher weights to words that appear frequently in a specific document but rarely across the corpus. This helps identify words that are distinctive to a particular document. TF-IDF vectors are often sparse, meaning they contain many zero values.

- Word Embeddings (Word2Vec, GloVe): Word embeddings represent words as dense, low-dimensional vectors. Words with similar meanings are located closer together in the vector space. Word2Vec and GloVe are popular algorithms for generating word embeddings. They capture semantic relationships between words, but don’t inherently represent the meaning of entire sentences or documents.

- Sentence Embeddings (e.g., Sentence-BERT): Sentence embeddings aim to represent entire sentences or paragraphs as vectors. These embeddings capture more semantic information than word embeddings and are better suited for tasks like comparing the similarity of larger chunks of text.

Text Preprocessing: Cleaning and Preparing Text

Before vectorizing text, it’s crucial to perform preprocessing steps to improve the accuracy and effectiveness of cosine similarity calculations. Common preprocessing steps include:

- Stop Word Removal: Removing common words like “the,” “a,” and “is” that don’t carry much semantic weight. These words often appear frequently and can skew the results.

- Stemming: Reducing words to their root form (e.g., “running” to “run”). This helps group words with the same meaning together, even if they have different suffixes.

- Lemmatization: Similar to stemming, but it produces actual words (lemmas) rather than just root forms. For example, “better” would be lemmatized to “good.” Lemmatization is generally more accurate than stemming.

- Lowercasing: Converting all text to lowercase to ensure that words like “The” and “the” are treated as the same word.

Use Cases of Cosine Similarity in SEO

Cosine similarity plays a crucial role in SEO optimization, particularly in content similarity analysis and keyword clustering. By measuring the cosine of the angle between word embeddings or text vectors, SEO professionals can enhance content strategies and improve search engine rankings.

1. Content Similarity Analysis

Content similarity analysis helps identify pages that share similar topics or keywords. Search engines favor websites with well-structured, non-duplicate content. Using cosine similarity allows SEO professionals to:

- Detect duplicate content: By comparing vector representations of web pages, businesses can identify and resolve duplicate content issues that might harm their rankings.

- Optimize Internal Linking: Group similar content pages together, improving site navigation and user experience.

- Audit Content Overlaps: Regular content audits with cosine similarity measures can prevent keyword cannibalization.

2. Keyword Clustering

Keyword clustering involves grouping semantically similar keywords to streamline content creation and SEO strategies. Cosine similarity helps marketers:

- Identify Keyword Variations: Group keywords with similar meanings even if they don’t share exact terms (e.g., “AI in healthcare” and “machine learning in medicine”).

- Enhance Content Planning : Cluster keywords to develop pillar pages and related sub-topic content.

- Optimize PPC Campaigns: Organize keyword lists to improve ad relevance and click-through rates.

By leveraging cosine similarity in content analysis and keyword grouping, SEO professionals can create more coherent, targeted content, improving both organic rankings and user engagement.

Cosine Similarity vs. Other Metrics

| Metric | Description |

|---|---|

| Cosine Similarity | Measures angle between vectors |

| Euclidean Distance | Measures straight-line distance between points |

| Jaccard Similarity | Compares shared elements in sets |

Implementing Cosine Similarity in Python

Python provides several tools for computing cosine similarity, especially within libraries like numpy, scikit-learn, and tensorflow.

Python Code Example

import numpy as np

from sklearn.metrics.pairwise import cosine_similarity

# Define vectors A and B

A = np.array([[1, 2, 3]])

B = np.array([[4, 5, 6]])

# Compute cosine similarity

similarity = cosine_similarity(A, B)

print("Cosine Similarity:", similarity[0][0])Optimizing SEO with Cosine Similarity

- Keyword Optimization: Use cosine similarity measures to group related keywords and improve on-page SEO.

- Text Analysis: Apply cosine similarity in text embeddings and word embeddings for better semantic understanding.

Challenges and Limitations

While cosine similarity can be a useful tool for certain SEO-related tasks, it has important limitations that must be considered:

- Context Loss: Cosine similarity doesn’t capture word order or context without advanced NLP algorithms.

- Lack of Semantic Understanding: Cosine similarity primarily measures the angle between vectors, not the deeper semantic meaning of the text. Two documents can have high cosine similarity based on shared keywords but still have different overall meanings.

- Sparse Data: TF-IDF vectors can yield inaccurate results if data sparsity is high.

- Context Dependence: Cosine similarity doesn’t inherently account for the context in which words are used. The same word can have different meanings depending on the context.

- Word Order: Traditional cosine similarity calculations (using methods like TF-IDF) don’t consider word order. “The dog bit the man” and “The man bit the dog” would have the same vector representation, even though the meanings are different. More advanced techniques like sentence embeddings address this to some degree.

- SEO is More Than Similarity: SEO involves much more than just text similarity. Factors like link quality, domain authority, user experience, and technical SEO play crucial roles. Cosine similarity can be a helpful tool within a broader SEO strategy, but it’s not a primary driver of search rankings.

Cosine similarity should be used in conjunction with other NLP techniques and a comprehensive understanding of SEO best practices. It’s best used for tasks like identifying related content or grouping keywords, rather than directly assessing the quality or relevance of content for search engines.

Conclusion

Cosine similarity is an indispensable tool for SEO optimization. By leveraging this metric, businesses can better understand content relationships, improve keyword strategies, and enhance user engagement. Tools like numpy, scikit-learn, and tensorflow make implementing this metric straightforward for data analysis, text similarity, and more.

Whether you’re optimizing your website, building a recommendation system, or analyzing word embeddings, cosine similarity remains a foundational metric in the world of machine learning and SEO.

Published on: 2025-02-15

Updated on: 2025-02-15